September 08, 2022

Is AI Ready To Replace Product Photography?

Latent diffusion models (DALLE-2, Midjourney, Stable Diffusion) recently broke into the popular zeitgeist in the form of some hilarious segments on talk shows and foreboding debates about what does and doesn’t count as original art.

As a long-time advocate for the promise of content + tech, I’ll freely admit to being a card-carrying fanboy for LDMs and large language models (GPT-3, BLOOM). LLMs are, in a way, the analog of LDMs for written content. I think emerging tech in this area will change everything.

Having said that, the most fun I’ve had on a computer in a very long time has come by way of Stable Diffusion, a latent text-to-image model similar to OpenAI’s DALLE-2, except they’ve publically released their model for all to tinker with. Within days of its release, the community came together and extended its capabilites with all kinds of bells and whistles.

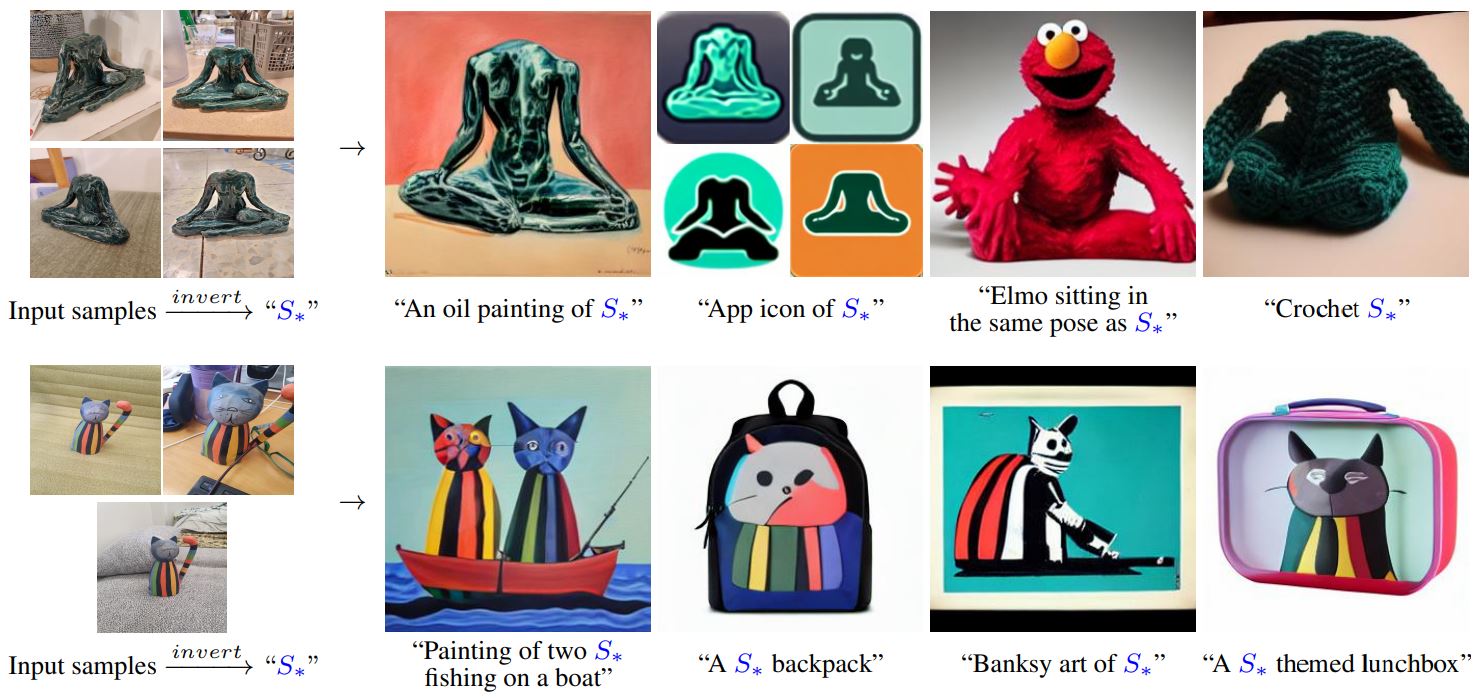

One of those bells and/or whistles is textual inversion, which allows anyone to “personalize” the text-to-image model by adding objects or themes of their own, thereby allowing them to call up those objects when generating images from text.

An obvious application for textual inversion + Stable Diffusion is product photography. How amazing would it be to call up a perfect representation of a product in any number of scenes, settings and themes? I wanted to find out, so I used textual inversion to create my own custom embeddings for Standard Diffusion with the Canary Cap. The results were.. interesting.

On one hand, the model does a poor job of capturing and representing the new object fed into the model (good enough for government work, I guess?) Although the resulting images are at times bizarre, hilarious, and intriguing, none of them actually look enough like the Canary Cap to use in any meaningful way.

On the other hand, WTF? This is AMAZING! To even get an approximation of a one-of-a-kind product with just five training images is impressive. And more impressive is the way the quality of scenes are rendered. While the Canary Cap is a frakenstein, many of the scenes themselves are nearly perfect.

So no, AI isn’t replacing product photography today. But what’s so exciting about the recent developments with LDMs and LLMs is not what they’re able to do today, it’s the line-of-sight they give us to near-term capabilites. Change is coming quickly. And when it does, I’m hoping to shelve my poorly produced Canary Cap product shots 🤞.

If you want to give Stable Diffusion a shot, you can do so for free using this Google Colab notebook.